SIFT+BoW图像搜索

目前在补图像处理的东西,公司最近也有一个图像搜索的任务,这篇文章先把大致方法和流程记录一下,之后计划在图像处理学习完之后,出一系列文章,把有关内容组织一下更新出来。本篇博客十分实用,代码可以说拿走就能用!!!

1、建立bags of words

import argparse as ap

import cv2

import numpy as np

import os

from sklearn.externals import joblib

from scipy.cluster.vq import *

from sklearn import preprocessing

import matplotlib.pyplot as plt

train_path = "data\\" #训练样本文件夹路径

training_names = os.listdir(train_path)

numWords = 64 # 聚类中心数

image_paths = [] # 所有图片路径

ImageSet = {}

for name in training_names:

ls = os.listdir(train_path + "/" + name)

print(ls, name)

ImageSet[name] = len(ls)

for training_name in ls:

image_path = os.path.join(train_path + name, training_name)

image_paths += [image_path]

# Create feature extraction and keypoint detector objects

sift_det=cv2.xfeatures2d.SIFT_create()

# List where all the descriptors are stored

des_list=[] # 特征描述

for name, count in ImageSet.items():

dir = train_path + name

print("从 " + name + " 中提取特征")

trainNum = count

for item in image_paths:

filename =item

img=cv2.imread(filename)

kp,des=sift_det.detectAndCompute(img,None)

des_list.append((filename.split('\\')[2], des))

descriptors = des_list[0][1]

print('生成向量数组')

for image_path, descriptor in des_list[1:]:

descriptors = np.vstack((descriptors, descriptor)) #将多个数据压在一起

# Perform k-means clustering

print ("开始 k-means 聚类: %d words, %d key points" %(numWords, descriptors.shape[0]))

voc, variance = kmeans(descriptors, numWords, 1)

# Calculate the histogram of features

im_features = np.zeros((len(image_paths), numWords), "float32")

for i in range(len(image_paths)):

words, distance = vq(des_list[i][1],voc)

for w in words:

im_features[i][w] += 1

# Perform Tf-Idf vectorization

nbr_occurences = np.sum( (im_features > 0) * 1, axis = 0)

idf = np.array(np.log((1.0*len(image_paths)+1) / (1.0*nbr_occurences + 1)), 'float32')

# Perform L2 normalization

im_features = im_features*idf

im_features = preprocessing.normalize(im_features, norm='l2')

print('保存词袋模型文件')

joblib.dump((im_features, image_paths, idf, numWords, voc), "bow.pkl", compress=3)

2、搜索相似图像

#python search.py -i query/target.jpg

import argparse as ap

import cv2

import imutils

import numpy as np

import os

from sklearn.externals import joblib

from scipy.cluster.vq import *

from sklearn import preprocessing

import numpy as np

from pylab import *

mpl.rcParams['font.sans-serif'] = ['SimHei']

from PIL import Image

from imutils.feature.rootsift import RootSIFT

# Get the path of the training set

parser = ap.ArgumentParser()

parser.add_argument("-i", "--image", help="Path to query image", required="True")

args = vars(parser.parse_args())

# Get query image path

image_path = args["image"]

# Load the classifier, class names, scaler, number of clusters and vocabulary

im_features, image_paths, idf, numWords, voc = joblib.load("bow.pkl")

# Create feature extraction and keypoint detector objects

sift_det=cv2.xfeatures2d.SIFT_create()

# List where all the descriptors are stored

des_list = []

im = cv2.imread(image_path)

gray = cv2.cvtColor(im, cv2.COLOR_RGB2GRAY)

kp, des = sift_det.detectAndCompute(gray, None)

des_list.append((image_path, des))

# Stack all the descriptors vertically in a numpy array

descriptors = des_list[0][1]

#

test_features = np.zeros((1, numWords), "float32")

words, distance = vq(descriptors,voc)

for w in words:

test_features[0][w] += 1

# Perform Tf-Idf vectorization and L2 normalization

test_features = test_features*idf

test_features = preprocessing.normalize(test_features, norm='l2')

score = np.dot(test_features, im_features.T)

rank_ID = np.argsort(-score)

# Visualize the results

figure('基于OpenCV的图像检索')

subplot(5,5,1)#

title('目标图片')

imshow(im[:,:,::-1])

axis('off')

for i, ID in enumerate(rank_ID[0][0:20]):

img = Image.open(image_paths[ID])

#gray()

subplot(5,5,i+6)

imshow(img)

title('第%d相似'%(i+1))

axis('off')

show()

运行的时候执行以下语句

python search.py -i <图像路径>

例如:python search.py -i 1.png

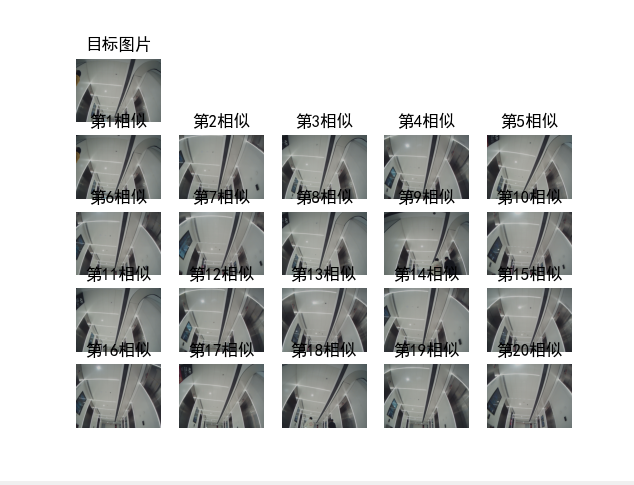

3、结果展示

项目中做了可视化的部分进行展示,如下图所示:

结果还是很好的,搜索速度也很快,十分的好用啊!