You Won't Understand GoogLeNet from One Article

This post introduces GoogLeNet and reimplements the Inception block in PyTorch.

I. Introduction

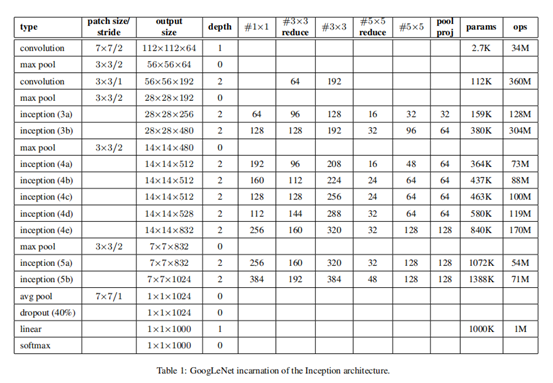

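GoogLeNet comes from a paper Google published in 2014. The name is a nod to LeNet, but the architecture has little in common with it. Its core component is the Inception block, which stacks several small convolutions and runs convolutions of different kernel sizes in parallel. GoogLeNet (Inception v1) and the later v2 and v3 variants achieved very good results in competitions.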

II. How It Works

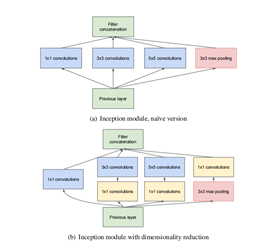

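1) Inception block. The structure is shown in the figure above. The upper version is the original (naive) design. The authors found that features can still be learned well in a lower-dimensional space, and that doing so greatly reduces computation, so they adopted the lower version, which adds dimension reduction. This is the structure later used in the Inception v1 block.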
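To make the saving concrete, here is a minimal sketch that counts convolution weights for one 5x5 branch with and without the 1x1 reduction. The channel sizes (192 in, 16 after reduction, 32 out) are borrowed from the first Inception block in the code later in this post. The helper name `conv_weights` is made up for illustration, and biases are ignored.

```python
def conv_weights(in_channels, out_channels, kernel_size):
    """Number of weights in a square 2D convolution, ignoring bias."""
    return in_channels * out_channels * kernel_size * kernel_size


in_channels, reduce_channels, out_channels = 192, 16, 32

# Naive branch: a 5x5 convolution applied directly to the input.
naive = conv_weights(in_channels, out_channels, 5)

# Reduced branch: 1x1 convolution down to 16 channels, then the 5x5 convolution.
reduced = (conv_weights(in_channels, reduce_channels, 1)
           + conv_weights(reduce_channels, out_channels, 5))

print(naive, reduced, round(naive / reduced, 1))  # 153600 15872 9.7
```

Both variants run at the same spatial resolution, so the multiply-accumulate count shrinks by the same factor as the weight count.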

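2) 1x1 convolution. Following NiN (Network in Network), the paper makes heavy use of 1x1 convolutions. They add nonlinear capacity (through the activation that follows each one), and they reduce the channel dimension, which cuts computation. To summarize, a 1x1 convolution serves three main purposes: raising or lowering the channel dimension, adding nonlinearity, and pooling across channels.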
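A 1x1 convolution applies the same linear map to the channel vector at every pixel, which is why it can change the channel count and mix channels. The sketch below writes this out in plain Python, without any framework, to make that explicit. `conv1x1` is a hypothetical helper, not a library function, and the activation that supplies the nonlinearity is left out.

```python
def conv1x1(x, weight):
    """Apply a 1x1 convolution without bias.

    x:      nested list shaped [in_channels][height][width]
    weight: nested list shaped [out_channels][in_channels]
    """
    height, width = len(x[0]), len(x[0][0])
    return [
        [
            [sum(w * x[c][i][j] for c, w in enumerate(row)) for j in range(width)]
            for i in range(height)
        ]
        for row in weight
    ]


# A 4-channel, 2x2 feature map where channel c is filled with the value c + 1.
x = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]

# Averaging weights turn the 1x1 convolution into mean pooling across channels.
channel_mean = conv1x1(x, [[0.25, 0.25, 0.25, 0.25]])

# Two output channels: dimensionality reduction from 4 channels to 2.
reduced = conv1x1(x, [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]])

print(channel_mean)  # [[[2.5, 2.5], [2.5, 2.5]]]
print(len(reduced), reduced[1][0][0])  # 2 7.0
```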

III. Network Structure and Reimplementation

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class BN_Conv2d(nn.Module):
        """Conv2d followed by BatchNorm2d and ReLU."""

        def __init__(self, in_channels, out_channels, kernel_size, stride, padding, dilation=1, bias=True):
            super(BN_Conv2d, self).__init__()
            self.seq = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size, stride=stride,
                          padding=padding, dilation=dilation, bias=bias),
                nn.BatchNorm2d(out_channels)
            )

        def forward(self, x):
            return F.relu(self.seq(x))
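The `# same padding` comments in the Inception block rely on the standard output-size formula for a convolution, floor((n + 2p - d(k - 1) - 1) / s) + 1, which is the formula PyTorch documents for `nn.Conv2d`. A small sketch to check it; `conv_out_size` is an illustrative helper, not part of PyTorch.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis of a convolution (floor mode)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# With stride 1, padding = (kernel_size - 1) // 2 keeps the size unchanged.
print(conv_out_size(28, kernel_size=1, padding=0))  # 28
print(conv_out_size(28, kernel_size=3, padding=1))  # 28
print(conv_out_size(28, kernel_size=5, padding=2))  # 28

# The 7x7, stride-2, padding-3 stem convolution halves a 224-pixel input.
print(conv_out_size(224, kernel_size=7, stride=2, padding=3))  # 112
```

Keeping the spatial size identical across branches is what allows their outputs to be concatenated along the channel dimension.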

    class Inception_builder(nn.Module):
        """
        Inception block: four parallel branches concatenated along channels.

        block_type "type1" uses a 5x5 convolution in the first branch,
        "type2" replaces it with two stacked 3x3 convolutions.
        """

        def __init__(self, block_type, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4):
            super(Inception_builder, self).__init__()
            self.block_type = block_type  # controlled by strings "type1", "type2"

            # Build only the first branch that this block type actually uses.
            if block_type == "type1":
                # 5x5 reduce, 5x5
                self.branch1 = nn.Sequential(
                    BN_Conv2d(in_channels, b1_reduce, 1, stride=1, padding=0, bias=False),
                    BN_Conv2d(b1_reduce, b1, 5, stride=1, padding=2, bias=False)  # same padding
                )
            elif block_type == "type2":
                # 5x5 reduce, 2x3x3
                self.branch1 = nn.Sequential(
                    BN_Conv2d(in_channels, b1_reduce, 1, stride=1, padding=0, bias=False),
                    BN_Conv2d(b1_reduce, b1, 3, stride=1, padding=1, bias=False),  # same padding
                    BN_Conv2d(b1, b1, 3, stride=1, padding=1, bias=False)
                )
            else:
                raise ValueError(f"unknown block_type: {block_type!r}")

            # 3x3 reduce, 3x3
            self.branch2 = nn.Sequential(
                BN_Conv2d(in_channels, b2_reduce, 1, stride=1, padding=0, bias=False),
                BN_Conv2d(b2_reduce, b2, 3, stride=1, padding=1, bias=False)
            )

            # max pool, pool proj
            self.branch3 = nn.Sequential(
                nn.MaxPool2d(3, stride=1, padding=1),  # to keep size, also use same padding
                BN_Conv2d(in_channels, b3, 1, stride=1, padding=0, bias=False)
            )

            # 1x1
            self.branch4 = BN_Conv2d(in_channels, b4, 1, stride=1, padding=0, bias=False)

        def forward(self, x):
            out1 = self.branch1(x)
            out2 = self.branch2(x)
            out3 = self.branch3(x)
            out4 = self.branch4(x)
            # Concatenate along the channel dimension: b1 + b2 + b3 + b4 channels.
            return torch.cat((out1, out2, out3, out4), 1)
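The `type2` branch swaps the 5x5 convolution for two stacked 3x3 convolutions. A rough sketch of why that trade can be attractive: with equal input and output channels, the stack covers the same 5x5 receptive field with fewer weights. The channel count of 32 is an arbitrary example, and `receptive_field` is a hypothetical helper that assumes stride-1, undilated layers. In the block above the first 3x3 widens from `b1_reduce` to `b1`, so the actual saving depends on those two values.

```python
def receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1, undilated convolutions."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field


channels = 32  # same channel count in and out, chosen only for illustration

weights_5x5 = channels * channels * 5 * 5
weights_two_3x3 = 2 * channels * channels * 3 * 3

print(receptive_field([5]), receptive_field([3, 3]))  # 5 5
print(weights_5x5, weights_two_3x3)  # 25600 18432
```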

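    class InceptionNet(nn.Module):
        """Unfinished placeholder; GoogleNet does not use it."""

        def __init__(self):
            super(InceptionNet, self).__init__()

        def forward(self, x):
            raise NotImplementedError("InceptionNet is an empty stub")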

    class GoogleNet(nn.Module):
        """
        Inception-v1, Inception-v2
        """

        def __init__(self, str_version, num_classes=1000):
            super(GoogleNet, self).__init__()
            # "v1" selects the 5x5 branch; any other value selects the 2x3x3 branch.
            self.block_type = "type1" if str_version == "v1" else "type2"
            self.version = str_version  # "v1", "v2"
            self.conv1 = BN_Conv2d(3, 64, 7, stride=2, padding=3, bias=False)
            self.conv2 = BN_Conv2d(64, 192, 3, stride=1, padding=1, bias=False)
            # Arguments: block_type, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4
            self.inception3_a = Inception_builder(self.block_type, 192, 16, 32, 96, 128, 32, 64)
            self.inception3_b = Inception_builder(self.block_type, 256, 32, 96, 128, 192, 64, 128)
            self.inception4_a = Inception_builder(self.block_type, 480, 16, 48, 96, 208, 64, 192)
            self.inception4_b = Inception_builder(self.block_type, 512, 24, 64, 112, 224, 64, 160)
            self.inception4_c = Inception_builder(self.block_type, 512, 24, 64, 128, 256, 64, 128)
            self.inception4_d = Inception_builder(self.block_type, 512, 32, 64, 144, 288, 64, 112)
            self.inception4_e = Inception_builder(self.block_type, 528, 32, 128, 160, 320, 128, 256)
            self.inception5_a = Inception_builder(self.block_type, 832, 32, 128, 160, 320, 128, 256)
            self.inception5_b = Inception_builder(self.block_type, 832, 48, 128, 192, 384, 128, 384)
            self.fc = nn.Linear(1024, num_classes)

        def forward(self, x):
            out = self.conv1(x)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.conv2(out)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.inception3_a(out)
            out = self.inception3_b(out)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.inception4_a(out)
            out = self.inception4_b(out)
            out = self.inception4_c(out)
            out = self.inception4_d(out)
            out = self.inception4_e(out)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.inception5_a(out)
            out = self.inception5_b(out)
            # A 7x7 average pool reduces the final 7x7 map (224x224 input) to 1x1.
            out = F.avg_pool2d(out, 7)
            out = F.dropout(out, 0.4, training=self.training)
            out = out.view(out.size(0), -1)
            out = self.fc(out)
            # Softmax over the class dimension; dim must be given explicitly.
            return F.softmax(out, dim=1)
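A quick sanity check on the numbers in `GoogleNet`: each Inception block outputs `b1 + b2 + b3 + b4` channels after concatenation, and that sum has to equal the next block's `in_channels`. The sketch below replays the nine configurations in plain Python, together with the spatial size for a 224x224 input. The input size is an assumption: the code does not fix it, but `F.avg_pool2d(out, 7)` expects a 7x7 map at the end. `out_size` is an illustrative helper.

```python
# (name, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4), copied from GoogleNet.__init__
BLOCKS = [
    ("inception3_a", 192, 16, 32, 96, 128, 32, 64),
    ("inception3_b", 256, 32, 96, 128, 192, 64, 128),
    ("inception4_a", 480, 16, 48, 96, 208, 64, 192),
    ("inception4_b", 512, 24, 64, 112, 224, 64, 160),
    ("inception4_c", 512, 24, 64, 128, 256, 64, 128),
    ("inception4_d", 512, 32, 64, 144, 288, 64, 112),
    ("inception4_e", 528, 32, 128, 160, 320, 128, 256),
    ("inception5_a", 832, 32, 128, 160, 320, 128, 256),
    ("inception5_b", 832, 48, 128, 192, 384, 128, 384),
]


def out_size(size, kernel_size, stride, padding):
    """Output length along one axis of a convolution or max pool (floor mode)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# Channels: every block must receive what the previous stage produced.
channels = 192  # output of conv2
for name, in_channels, _b1_reduce, b1, _b2_reduce, b2, b3, b4 in BLOCKS:
    assert in_channels == channels, name
    channels = b1 + b2 + b3 + b4  # width after torch.cat along dim 1

# Spatial size: the stem convolution, then four 3x3 stride-2 max pools.
size = out_size(224, kernel_size=7, stride=2, padding=3)
for _ in range(4):
    size = out_size(size, kernel_size=3, stride=2, padding=1)

print(channels, size)  # 1024 7
```

The final 1024 channels match `nn.Linear(1024, num_classes)`, and the 7x7 map matches the 7x7 average pool.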

    if __name__ == '__main__':
        model = GoogleNet('v1')
        print(model)